A Large Portion Of Your Google Analytics Traffic Might Be Fake

9 October 2017, Remco Van Der Beek

How to detect Mozilla Compatible Agent traffic from bots.

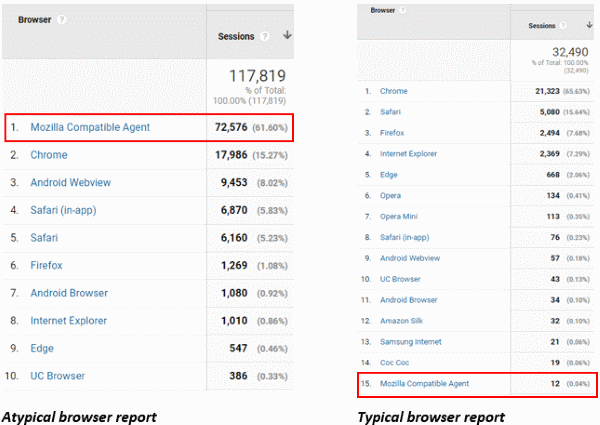

Whilst auditing the Google Analytics account of one of our clients recently, I came across a curious thing. The GA browser report showed that more than 60% of sessions occurred via the browser Mozilla Compatible Agent.

In most Google Analytics accounts, Chrome, Safari, Firefox and good old IE tend to top the browser list, and Mozilla Compatible Agent represents a tiny fraction of the total traffic. But in the case at hand, over 60% of traffic came via this browser. My curiosity was triggered immediately. Time to get my analyst hat on and dig deeper!

First, I tried to understand how this traffic entered the site, that is, via which traffic channel, using the secondary dimension Default Channel Grouping. Much to my surprise, nearly all of that Mozilla Compatible Agent traffic seemed to be Direct traffic from new users. And all of that traffic had a bounce rate of 100%: only one page was viewed per session, which of course led to a recorded session duration of 0 seconds. Hmmmm…

Next, I looked at the geographical origin of that traffic, and concluded that nearly all of that traffic came from one and the same city. And it wasn’t London, Manchester or any other big city that you would expect to top the list. Suspense…

I then analysed via which service provider all that traffic reached the site. It turned out nearly all of it came via a single service provider called ‘customer lan subnet’!

So, we have over 60% of site traffic coming via an otherwise small browser, all hitting the site directly from one and the same city, via one and the same service provider. And, in all these sessions, only one page was viewed. Something smells very, very dodgy…

We had seen similar behaviour with another client recently. In that case, we found out that the company’s risk auditors had installed monitoring on the site. This automated routine constantly pinged different pages on the site, and then left immediately.

Something similar seems to be going on here. Although we cannot be 100% sure of the origin of this traffic, we are confident that this sudden spike in traffic, with all of the characteristics described above, is caused by bots which emulate the user agent Mozilla Compatible Agent (MCA). The only alternative explanation is a sudden rise in mobile traffic, where MCA can be a genuine user agent, and that is certainly not the case here.

How can you assess whether your analytics are impacted by an MCA bot issue?

If you see a sudden spike in traffic to your website, check which browser and service provider combinations are sending traffic, as illustrated above. If you see a lot of traffic coming via MCA, and a particular service provider (this can also be the name of an organisation, e.g. Microsoft), you more than likely have a bot traffic issue on your hands.
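If you prefer to run this check on exported data rather than by eye, the idea can be sketched in a few lines of Python. This is only a minimal sketch: the rows, the field names (`browser`, `service_provider`, `sessions`, `bounces`) and the two thresholds are assumptions made up for illustration, not values taken from the client account or from any Google Analytics export format.

```python
# Hypothetical rows, shaped like a browser report exported with
# Service Provider as the secondary dimension. All numbers are invented.
rows = [
    {"browser": "Chrome", "service_provider": "bt", "sessions": 1200, "bounces": 480},
    {"browser": "Safari", "service_provider": "virgin media", "sessions": 700, "bounces": 300},
    {"browser": "Mozilla Compatible Agent", "service_provider": "customer lan subnet",
     "sessions": 3100, "bounces": 3100},
]


def flag_suspicious(rows, min_share=0.2, min_bounce_rate=0.95):
    """Return browser/provider pairs with a large traffic share and a near-100% bounce rate.

    The thresholds are arbitrary starting points (an assumption), to be tuned per site.
    """
    total = sum(r["sessions"] for r in rows)
    flagged = []
    for r in rows:
        if total == 0 or r["sessions"] == 0:
            continue  # nothing to compute a share or a bounce rate from
        share = r["sessions"] / total
        bounce_rate = r["bounces"] / r["sessions"]
        if share >= min_share and bounce_rate >= min_bounce_rate:
            flagged.append(
                (r["browser"], r["service_provider"], round(share, 2), round(bounce_rate, 2))
            )
    return flagged


for browser, provider, share, bounce_rate in flag_suspicious(rows):
    print(f"{browser} via {provider}: {share:.0%} of sessions, {bounce_rate:.0%} bounce rate")
```

With the invented numbers above, only the Mozilla Compatible Agent row is flagged: Chrome also has a sizeable share of sessions, but its bounce rate is nowhere near 100%. A flagged combination is a prompt to investigate further (city, channel, new versus returning users), not proof of bot traffic in itself.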

How can you deal with a Mozilla Compatible Agent issue?

In the case at hand, the bot filtering option in Google Analytics had been checked. This was obviously not enough to keep this bot traffic away.

What you need to do is twofold:

- Create a custom filter to permanently remove traffic from that particular service provider, in this case ‘customer lan subnet’, from your data. This will remove any traffic from that bot going forward.

- Since filters do not work retroactively, we recommend you also create a segment that excludes traffic from that particular service provider from your reports. Unlike the filter, this segment also applies to historical data.
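Before you commit to a filter, it can be worth checking the exclusion pattern against a list of service provider names exported from your reports, because filtered data cannot be recovered afterwards. The sketch below assumes the pattern is a regular expression that should match the provider name exactly and case-insensitively; the pattern, the function names and the sample provider names are illustrative assumptions, not settings copied from the client account.

```python
import re

# Assumed exclusion pattern: anchored at both ends so that it only matches
# this exact provider name and not longer names that merely contain it.
EXCLUDE_PATTERN = re.compile(r"^customer lan subnet$", re.IGNORECASE)


def keep_session(service_provider: str) -> bool:
    """Return True if a session from this service provider should stay in the data."""
    return EXCLUDE_PATTERN.search(service_provider.strip()) is None


def apply_exclusion(rows):
    """Drop rows whose service provider matches the exclusion pattern."""
    return [r for r in rows if keep_session(r["service_provider"])]


# Made-up provider names to sanity-check the pattern against.
sample = [
    {"service_provider": "customer lan subnet", "sessions": 3100},
    {"service_provider": "Customer LAN Subnet", "sessions": 12},
    {"service_provider": "bt", "sessions": 1200},
]

for row in apply_exclusion(sample):
    print(row["service_provider"], row["sessions"])
```

Anchoring the pattern is a deliberate choice here: an unanchored pattern would also remove any provider whose name merely contains the same words, and with it potentially legitimate traffic.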

PS: If you want to go all the way, you can modify your Google Analytics tracking code so that it does not even collect data from that Mozilla Compatible Agent. This requires a little coding knowledge. Be aware though that some of that MCA traffic might be legitimate, and that legitimate traffic will then not be collected either.
